Nothing To See Here. Just a Bunch Of Us Agreeing a 3 Basic Deepseek Ru…

Author: Tabitha Bohr · Posted: 25-02-08 09:17 · Views: 6 · Comments: 0

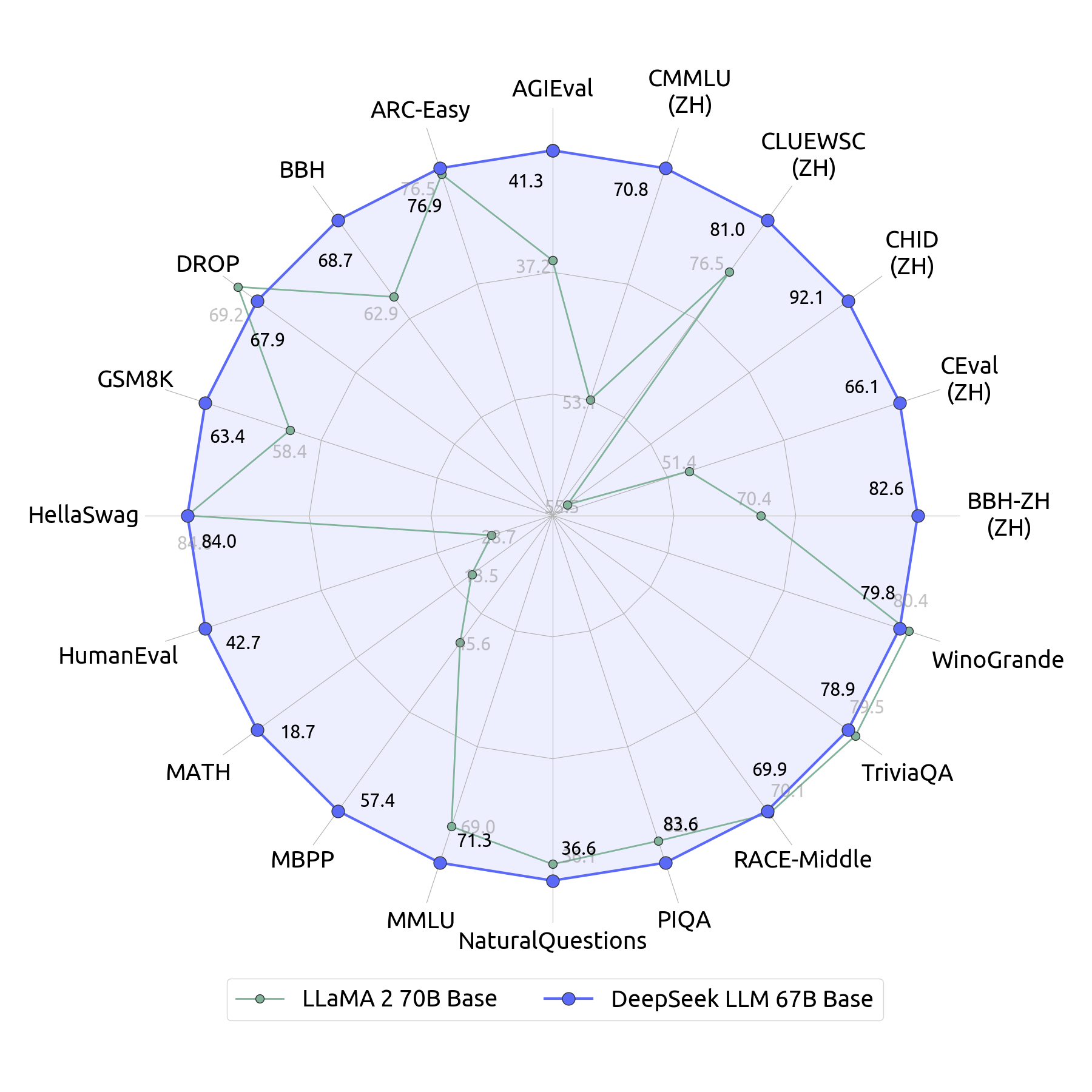

Chinese technology start-up DeepSeek has taken the tech world by storm with the release of two large language models (LLMs) that rival the performance of the dominant tools developed by US tech giants, but built with a fraction of the cost and computing power. On 29 January, tech behemoth Alibaba released its most advanced LLM to date, Qwen2.5-Max, which the company says outperforms DeepSeek's V3, another LLM that the firm released in December. Jacob Feldgoise, who studies AI talent in China at the CSET, says national policies that promote a model development ecosystem for AI could have helped companies such as DeepSeek, in terms of attracting both funding and talent. DeepSeek probably benefited from the government's investment in AI education and talent development, which includes numerous scholarships, research grants and partnerships between academia and industry, says Marina Zhang, a science-policy researcher at the University of Technology Sydney in Australia who focuses on innovation in China.

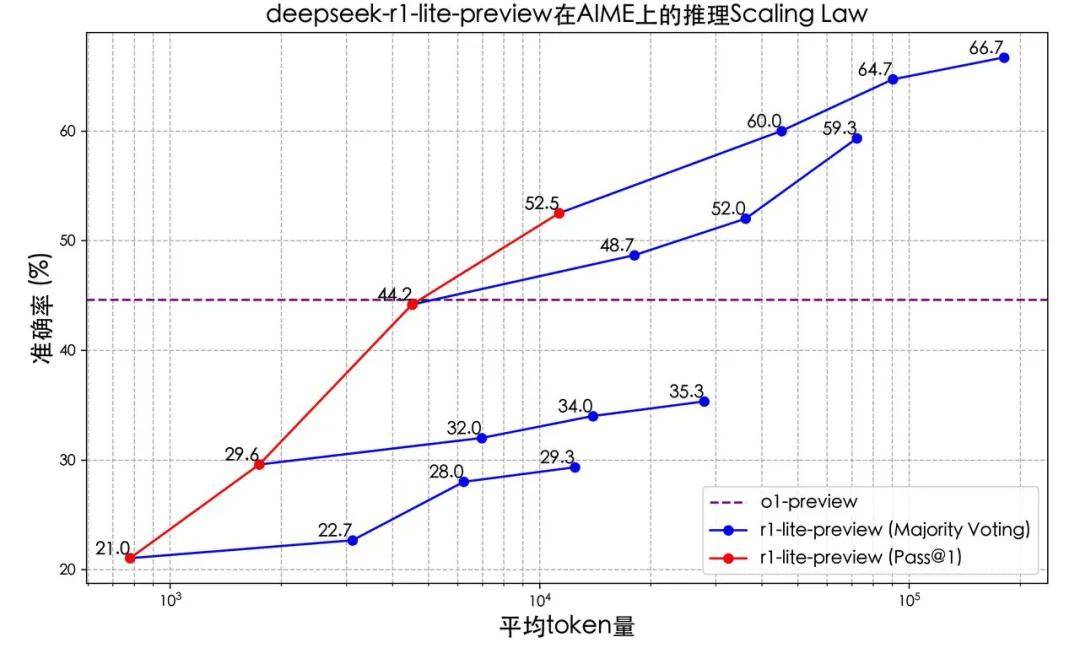

Investigating the system's transfer learning capabilities could be an interesting area of future research. One area where DeepSeek really shines is logical reasoning. DeepSeek open-sourced DeepSeek-R1, an LLM fine-tuned with reinforcement learning (RL) to improve reasoning capability. And last week, Moonshot AI and ByteDance released new reasoning models, Kimi 1.5 and 1.5-pro, which the companies claim can outperform o1 on some benchmark tests. And earlier this week, DeepSeek released another model, called Janus-Pro-7B, which can generate images from text prompts much like OpenAI's DALL-E 3 and Stable Diffusion, made by Stability AI in London. We offer various sizes of the code model, ranging from 1B to 33B versions. Explore all versions of the model, their file formats like GGML, GPTQ, and HF, and understand the hardware requirements for local inference. Its latest model, DeepSeek-V3, boasts an eye-popping 671 billion parameters while costing roughly 1/30th of OpenAI's API pricing: only $2.19 per million tokens compared with $60.00. OpenAgents enables general users to interact with agent functionalities through a web user interface optimized for swift responses and common failures, while offering developers and researchers a seamless deployment experience on local setups, providing a foundation for crafting innovative language agents and facilitating real-world evaluations.
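The "1/30th" figure can be checked against the two prices quoted above. The short sketch below uses only those two numbers; the variable names are illustrative, and it does not distinguish input from output tokens because the text does not.

```python
# Prices per million tokens, as quoted in the text (USD).
deepseek_price = 2.19
openai_price = 60.00

# How many times more expensive the OpenAI price is.
ratio = openai_price / deepseek_price

print(f"OpenAI price is about {ratio:.1f}x the DeepSeek price")
print(f"DeepSeek price is about 1/{round(ratio)} of the OpenAI price")
```

The ratio comes out a little under 30, so "1/30th" is a rounded figure rather than an exact one.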

In that year, China supplied almost half of the world's top AI researchers, while the United States accounted for just 18%, according to the think tank MacroPolo in Chicago, Illinois. Like, Shawn Wang and I were at a hackathon at OpenAI maybe a year and a half ago, and they would host an event in their office. "Our work demonstrates that, with rigorous verification mechanisms like Lean, it is feasible to synthesize large-scale, high-quality data." The AI model now holds a dubious record as the fastest-growing to face widespread bans, with institutions and authorities openly questioning its compliance with international data privacy laws. Given China's history of data acquisition practices, leveraging such methods would align with its strategic goals. Some members of the company's leadership team are younger than 35 years old and have grown up witnessing China's rise as a tech superpower, says Zhang. For example, she adds, state-backed initiatives such as the National Engineering Laboratory for Deep Learning Technology and Application, which is led by tech firm Baidu in Beijing, have trained thousands of AI specialists. It was inevitable that a company such as DeepSeek would emerge in China, given the huge venture-capital investment in firms developing LLMs and the many people who hold doctorates in science, technology, engineering or mathematics fields, including AI, says Yunji Chen, a computer scientist working on AI chips at the Institute of Computing Technology of the Chinese Academy of Sciences in Beijing.

In the Interesting Engineering publication, AI enthusiast Suhnylla Kler conveys the software and architectural elegance within DeepSeek's model. Kler comes to a similar conclusion about constraints: "DeepSeek V3 was able to keep their AI model running efficiently, showing that innovation isn't just about having the best tools but also about using what you have in the smartest way possible." She compares it to cooking a big meal in a kitchen with fewer appliances than you'd like. After having 2T more tokens than both. But for the GGML / GGUF format, it is more about having enough RAM. These large language models need to load fully into RAM or VRAM each time they generate a new token (piece of text). To achieve a higher inference speed, say 16 tokens per second, you would need more bandwidth. Suppose you have a Ryzen 5 5600X processor and DDR4-3200 RAM with a theoretical max bandwidth of 50 GBps.
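The bandwidth argument above can be turned into a rough back-of-the-envelope estimate. The sketch below assumes that generating one token requires streaming the whole model from memory once, so speed is bounded by bandwidth divided by model size. The 4 GB model size and the `efficiency` factor are hypothetical values chosen for illustration; they do not come from the text.

```python
def tokens_per_second(bandwidth_gb_s: float, model_size_gb: float,
                      efficiency: float = 1.0) -> float:
    """Upper-bound estimate: every token reads the full model from memory once.

    `efficiency` (0..1) is an assumed fudge factor for real-world overhead.
    """
    if model_size_gb <= 0 or not 0 < efficiency <= 1:
        raise ValueError("model_size_gb must be > 0 and efficiency in (0, 1]")
    return bandwidth_gb_s * efficiency / model_size_gb


def required_bandwidth_gb_s(target_tokens_per_s: float, model_size_gb: float,
                            efficiency: float = 1.0) -> float:
    """Bandwidth needed to reach a target speed under the same assumption."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return target_tokens_per_s * model_size_gb / efficiency


if __name__ == "__main__":
    bandwidth = 50.0   # GB/s, the theoretical figure quoted in the text
    model_size = 4.0   # GB, hypothetical quantized model (assumption)

    print(tokens_per_second(bandwidth, model_size))   # ideal case: 12.5
    print(required_bandwidth_gb_s(16, model_size))    # for 16 tokens/s: 64.0
```

Under these assumptions a 50 GBps system tops out below 16 tokens per second for a 4 GB model, which is why the text says more bandwidth would be needed. Real throughput would be lower still once overhead is accounted for.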

